Mon, 23 January, 2023

“Joyous!” That is how Hesham Yusuf described getting the news that he had successfully passed his PhD defence.

Hesham, an NSIRC PhD student supported by Lloyd's Register Foundation (LRF), TWI Ltd and the University of Sheffield, said that passing his PhD viva was an "unforgettable" experience.

His research was supervised jointly by academia and industry: Prof George Panoutsos and Dr Hua-Liang Wei from the University of Sheffield, and Dr Kai Yang, technology expert at TWI Ltd.

“I am over the moon to have passed my PhD. Working towards this significant milestone has been exceptionally rewarding,” said Hesham. “I have gained invaluable knowledge about the industry and developed a variety of skills. Throughout my journey, I have had the privilege of working with some of the most experienced researchers in my field. All in all, it has been an unforgettable time and well spent. I would like to thank my sponsors for this incredible opportunity. I am deeply indebted to my supervisors, Kai Yang and George Panoutsos, for their tremendous support throughout. Special thanks to all the friends I have made along the way who have made this endeavour even more enjoyable. I would like to dedicate my accomplishment to my family, without whom you would not be reading this right now.”

Before enrolling in the NSIRC PhD programme, Hesham completed a Bachelor's in Systems and Control Engineering and a Master's degree in Advanced Control and Systems Engineering from the University of Sheffield.

Explainability in Advanced Manufacturing: Leveraging the Interpretability of Multi-Criteria Decision-Making (MCDM) and Neutrosophic Logic

Hesham's PhD research investigates a new class of machine learning (ML) models, interpretable ML, for use in pipe inspection automation. Interpretable ML is also commonly referred to as explainable AI/ML. The interpretability of a model refers to how readily a human can understand its various components.

Explainability refers to whether interpretable information can be converted into an explanation.

The study explores how an explainable ML model will function in an industrial setting when used for classification.

The research used the non-destructive testing of high-density polyethylene (HDPE) butt-fusion pipe welds as its case study.

Results from Hesham's study can be used as a potential stepping stone for developing technology-ready explainable frameworks for practical application within industry.

Importantly, the research has made a significant contribution to academia by creating a framework for extracting graphical and textual explanations for the model result, developing a data-driven interpretable classifier based on MCDM and fuzzy logic, extending the classification framework with neutrosophic logic for enhanced explainability, and applying the frameworks to a real-world industrial case study: HDPE butt-fusion pipe weld inspection.

Dr Kai Yang from TWI Ltd said, “Hesham’s PhD work demonstrated his ability to come to a detailed understanding of explainable AI. He was responsible for proposing and developing the explanation frameworks for industrial inspection datasets, and his work provided a proof-of-concept implementation with valuable insight into the decision-making process.”

Hesham's research has been published in journals from IEEE and Springer. To find out more, visit his PhD Profile.

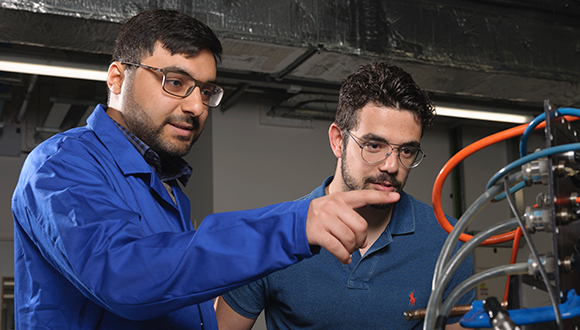

Hesham Yusuf (left) in the NDT lab at TWI Ltd. Photo: TWI Ltd
